My take on an AI website builder architecture

Backstory

When GPT first came out, I had the idea of building a fully AI-powered website builder — where users could generate and edit sections just by giving natural language instructions.

I managed to build a working version of the app, but I ended up discontinuing the project because the websites it generated didn’t meet the design quality I was aiming for.

Still, it was a really fun experiment. There were some interesting problems around how AI could manipulate and edit websites at a structural level. The app had two main parts:

- A custom DOM tree renderer

- A GPT-powered instruction interpreter that performed edits on that tree

Architecture Overview

Everything in the app is represented using a custom DOM tree — not raw HTML. This gives us full control over structure, token usage, and editability.

A simplified version of a DOM node looks like this:

    class Node {
      id: string;
      props: {
        className: string;
        style: object;
        children: Node[];
      };
    }

All GPT interactions happen within this DOM representation. Instead of asking GPT to output HTML, we reduce token usage and improve structure by having it generate structured edit commands. This also helps avoid malformed or broken tags when generating large sections.
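To make the tree representation concrete, here is a rough sketch of how a tree like this could be rendered to an HTML string. The `tag` and `text` fields, the `TreeNode` name (chosen to avoid clashing with the browser's built-in `Node` type), and the `renderToHtml` function are assumptions added for illustration; the simplified node above does not show them, and the real renderer may have worked quite differently.

```typescript
// Hypothetical node shape: `tag` and `text` are illustrative additions,
// not part of the simplified node shown in the post.
interface TreeNode {
  id: string;
  tag: string;
  text?: string;
  props: {
    className: string;
    style: Record<string, string>;
    children: TreeNode[];
  };
}

// Escape text so node content cannot break the generated markup.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Turn { backgroundColor: "green" } into "background-color:green".
function styleToCss(style: Record<string, string>): string {
  return Object.entries(style)
    .map(([key, value]) => {
      const cssKey = key.replace(/[A-Z]/g, (c) => "-" + c.toLowerCase());
      return `${cssKey}:${value}`;
    })
    .join(";");
}

// Recursively render a node and its children to an HTML string.
// Void elements such as <img> are not handled in this sketch.
function renderToHtml(node: TreeNode): string {
  const attrs = [`id="${escapeHtml(node.id)}"`];
  if (node.props.className) {
    attrs.push(`class="${escapeHtml(node.props.className)}"`);
  }
  const css = styleToCss(node.props.style);
  if (css) {
    attrs.push(`style="${escapeHtml(css)}"`);
  }
  const inner =
    escapeHtml(node.text ?? "") +
    node.props.children.map(renderToHtml).join("");
  return `<${node.tag} ${attrs.join(" ")}>${inner}</${node.tag}>`;
}
```

Because the tree is the source of truth and HTML is only an output, the model never has to produce well-formed markup itself.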

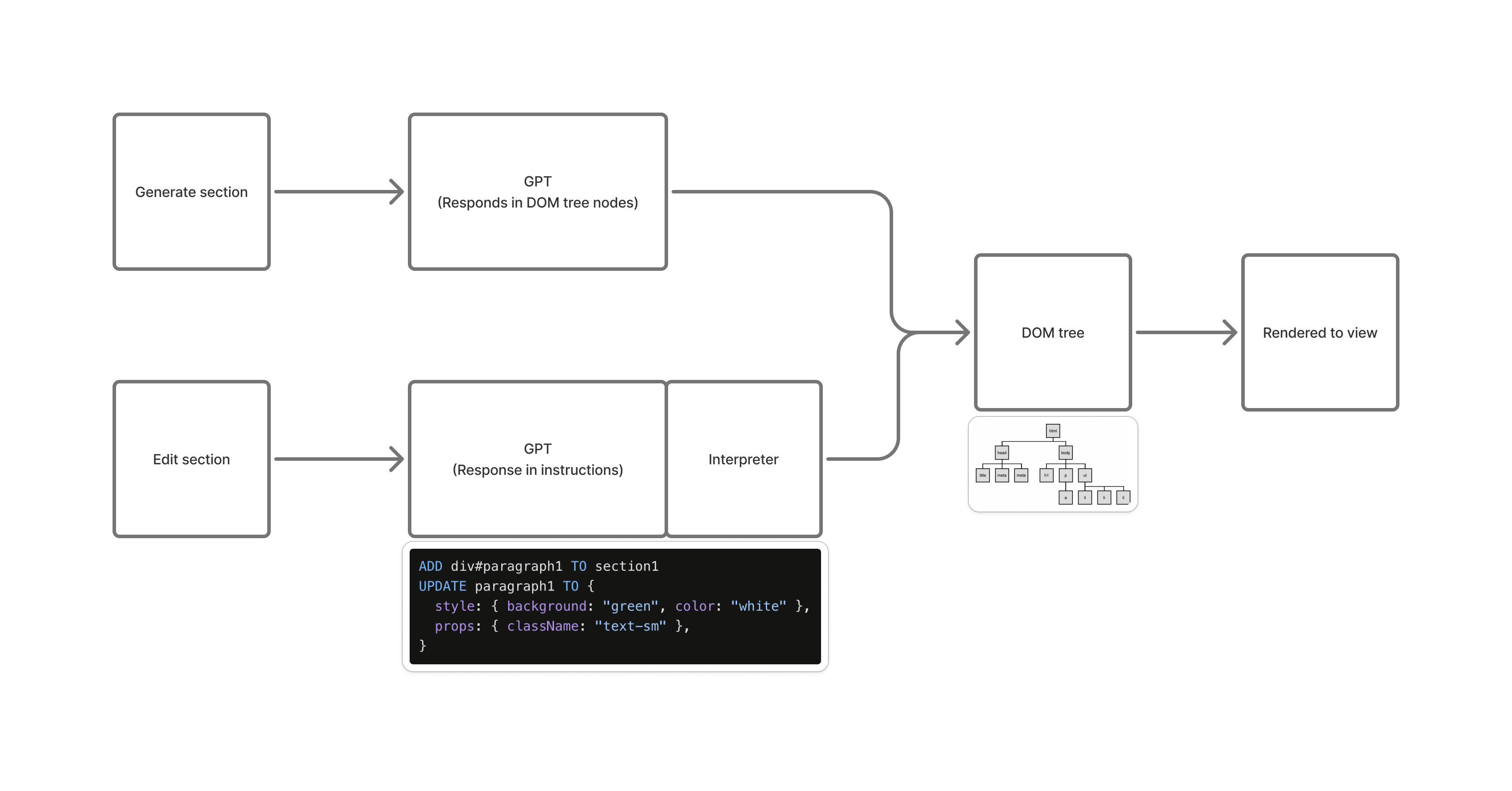

Section generation

Section generation works by asking GPT to return a structured DOM subtree, which we validate and append to the main DOM tree. This gives us full control over how new sections are added and rendered.
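A minimal sketch of what the validate-and-append step could look like, assuming the model returns the subtree as JSON. The `tag` field and the names `validateNode`, `collectIds`, and `appendSection` are hypothetical, and the checks shown here (shape, tag format, unique ids) are only one plausible set of validation rules.

```typescript
// Hypothetical node shape; `tag` is an illustrative addition.
interface TreeNode {
  id: string;
  tag: string;
  props: { className: string; style: Record<string, string>; children: TreeNode[] };
}

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Check one node's shape, then recurse into its children.
// `seen` holds ids already in use so duplicates are rejected.
function validateNode(value: unknown, seen: Set<string>, path: string): TreeNode {
  if (!isRecord(value)) throw new Error(`${path}: expected an object`);
  const { id, tag, props } = value;
  if (typeof id !== "string" || id === "") throw new Error(`${path}: missing id`);
  if (seen.has(id)) throw new Error(`${path}: duplicate id "${id}"`);
  seen.add(id);
  if (typeof tag !== "string" || !/^[a-z][a-z0-9]*$/.test(tag)) {
    throw new Error(`${path}: invalid tag`);
  }
  if (!isRecord(props)) throw new Error(`${path}: missing props`);

  const className = typeof props.className === "string" ? props.className : "";
  const style: Record<string, string> = {};
  if (isRecord(props.style)) {
    // Keep only string-valued style entries; silently drop anything else.
    for (const [key, val] of Object.entries(props.style)) {
      if (typeof val === "string") style[key] = val;
    }
  }
  const rawChildren = Array.isArray(props.children) ? props.children : [];
  const children = rawChildren.map((child, i) =>
    validateNode(child, seen, `${path}.children[${i}]`)
  );
  return { id, tag, props: { className, style, children } };
}

// Collect every id already present in the main tree.
function collectIds(node: TreeNode, ids: Set<string> = new Set()): Set<string> {
  ids.add(node.id);
  node.props.children.forEach((child) => collectIds(child, ids));
  return ids;
}

// Parse the model output, validate it, and append it under the root.
// Nothing is appended if parsing or validation throws.
function appendSection(root: TreeNode, modelOutput: string): TreeNode {
  const parsed: unknown = JSON.parse(modelOutput);
  const section = validateNode(parsed, collectIds(root), "section");
  root.props.children.push(section);
  return section;
}
```

Validating before appending means a malformed response leaves the main tree untouched, so the app can simply retry the generation.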

Section editing

Early on, GPT’s limited context window made editing hard. For large sections, the full HTML didn’t fit in the context window, and having the model return a regenerated version of the whole section was:

- Slow (10–30 seconds)

- Error-prone

- Inefficient

The Fix: A Mini Instruction Language

To solve this, I designed a simple SQL-like instruction language for editing the DOM tree:

    ADD div#paragraph1 TO section1

    UPDATE paragraph1 TO {
      style: { background: "green", color: "white" },
      props: { className: "text-sm" },
    }

GPT’s job became translating user input into this instruction set. Then, we ran it through a small interpreter that tokenizes each command and applies the changes to the DOM tree.
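Here is a rough sketch of what such an interpreter could look like, covering only the two commands shown above. The `tag` field and the names `tokenize`, `findById`, `applyPatch`, and `runInstructions` are hypothetical, and the grammar is inferred from the example rather than taken from the original implementation.

```typescript
// Hypothetical node shape; `tag` is an illustrative addition.
interface TreeNode {
  id: string;
  tag: string;
  props: { className: string; style: Record<string, string>; children: TreeNode[] };
}

type Value = string | { [key: string]: Value };

// Split the program into punctuation, quoted strings, and bare words.
function tokenize(source: string): string[] {
  return source.match(/[{}:,]|"[^"]*"|[^\s{}:,"]+/g) ?? [];
}

function findById(node: TreeNode, id: string): TreeNode | undefined {
  if (node.id === id) return node;
  for (const child of node.props.children) {
    const found = findById(child, id);
    if (found) return found;
  }
  return undefined;
}

// Merge `style` entries and set `props.className` when present.
function applyPatch(node: TreeNode, patch: { [key: string]: Value }): void {
  const { style, props } = patch;
  if (style && typeof style !== "string") {
    for (const [key, value] of Object.entries(style)) {
      if (typeof value === "string") node.props.style[key] = value;
    }
  }
  if (props && typeof props !== "string" && typeof props.className === "string") {
    node.props.className = props.className;
  }
}

// Parse and apply every command in `source` against the tree at `root`.
function runInstructions(source: string, root: TreeNode): void {
  const tokens = tokenize(source);
  let pos = 0;
  const next = (): string => {
    if (pos >= tokens.length) throw new Error("unexpected end of input");
    return tokens[pos++];
  };
  const expect = (token: string): void => {
    const got = next();
    if (got !== token) throw new Error(`expected ${token}, got ${got}`);
  };
  // A value is either a quoted string or a { key: value, ... } object.
  const parseValue = (): Value => {
    const token = next();
    if (token.startsWith('"')) return token.slice(1, -1);
    if (token !== "{") throw new Error(`unexpected token ${token}`);
    const result: { [key: string]: Value } = {};
    while (tokens[pos] !== "}") {
      const key = next();
      expect(":");
      result[key] = parseValue();
      if (tokens[pos] === ",") pos++; // trailing commas are allowed
    }
    expect("}");
    return result;
  };

  while (pos < tokens.length) {
    const keyword = next();
    if (keyword === "ADD") {
      const [tag, id] = next().split("#");
      expect("TO");
      const parent = findById(root, next());
      if (!tag || !id || !parent) throw new Error("invalid ADD command");
      parent.props.children.push({ id, tag, props: { className: "", style: {}, children: [] } });
    } else if (keyword === "UPDATE") {
      const target = findById(root, next());
      expect("TO");
      const patch = parseValue();
      if (!target || typeof patch === "string") throw new Error("invalid UPDATE command");
      applyPatch(target, patch);
    } else {
      throw new Error(`unknown command ${keyword}`);
    }
  }
}
```

Running the two example commands against a tree whose root has the id `section1` adds a `div` with the id `paragraph1` and then sets its background, text color, and class name. Treating the whole program as one token stream means a command can span several lines without any special handling.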

Now, instead of regenerating entire sections, the app sends only a lightweight, abstracted DOM tree and receives a list of edit instructions back. This cut edit latency from tens of seconds to nearly instant.
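One way to picture the "lightweight abstracted DOM tree" is as an indented outline that keeps ids, tags, and class names and drops everything else. The sketch below is an assumption about what such an abstraction might contain, not the format the app actually used; the `tag` field and the `toOutline` function are hypothetical.

```typescript
// Hypothetical node shape; `tag` is an illustrative addition.
interface TreeNode {
  id: string;
  tag: string;
  props: { className: string; style: Record<string, string>; children: TreeNode[] };
}

// Produce one line per node in the form tag#id.class1.class2,
// indented two spaces per level of depth.
// Styles are left out on the assumption that most edit requests
// only need ids and structure to be resolved.
function toOutline(node: TreeNode, depth: number = 0): string {
  const classes = node.props.className
    .split(/\s+/)
    .filter(Boolean)
    .map((name) => "." + name)
    .join("");
  const line = "  ".repeat(depth) + `${node.tag}#${node.id}${classes}`;
  const childLines = node.props.children.map((child) => toOutline(child, depth + 1));
  return [line, ...childLines].join("\n");
}
```

The `tag#id` notation mirrors the `ADD div#paragraph1` syntax of the instruction language, so the model sees the same identifiers in its input that it is expected to use in its output.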

Final flow

The Problem I Haven’t Solved

The biggest limitation: GPT has no design sense. It doesn’t “see” or “understand” spatial layout, color harmony, typography, or aesthetic principles. So the generated websites often just… look bad.

Text-only LLMs have no spatial perception: they work on sequences of tokens and aren’t trained to judge visual balance. I think that if AI-generated websites are ever going to look good, the core engine probably needs to be something like a diffusion model or another spatially aware system, not just GPT.

If you’re working on anything similar — or just curious about how this works under the hood — feel free to shoot me an email at nathanluyg@gmail.com.