Insights from building ComfyUI Cloud

Backstory

While building a lot of AI image generation apps, I wanted an API to prototype faster — something more flexible than Hugging Face. I built an early, very amateur execution engine and eventually discovered ComfyUI, which was far more complete.

This led me to experiment with turning ComfyUI into a developer-friendly cloud API. What started as a tool for myself turned into a serious attempt at a startup.

Below is a breakdown of the experiments, technical work, and lessons from a six-month sprint.

Growth & Retention

Over six months, I launched a series of projects, tested different growth strategies, and learned from each iteration.

Launch Strategy

My feedback loop was simple: build for a week → post to Reddit → measure impact (signups, usage, feedback).

Here’s a timeline of launches:

| # | Title | Views | Date |
|---|---|---|---|
| 1 | Run Comfy locally, with a cloud GPU | 25k | Feb 16, 2024 |
| 2 | ComfyUI Pets | 13k | March 3, 2024 |
| 3 | ComfyUI Pets v2 | 21k | March 18, 2024 |
| 4 | ComfyUI Cloud launch (different CTA) | 13k | March 24, 2024 |
| 5 | ComfyUI Cloud launch (CTA test again) | 3.9k | May 31, 2024 |
| 6 | Workflow catalog (comfyui-cloud.com launch) | 15k | June 21, 2024 |
| 7 | Comfyworkflows w/ reverse image search | 13k | June 28, 2024 |
| 8 | Workflow virus checker | 7.7k | July 2, 2024 |

Notes:

- ComfyUI Pets was a side project to drive traffic and turned into a fun hobby.

- Workflow catalog was aimed at SEO, and worked well until Google updated their ranking system.

- Virus checker was launched right after a real ComfyUI node installed malware — I used this moment to differentiate my service.

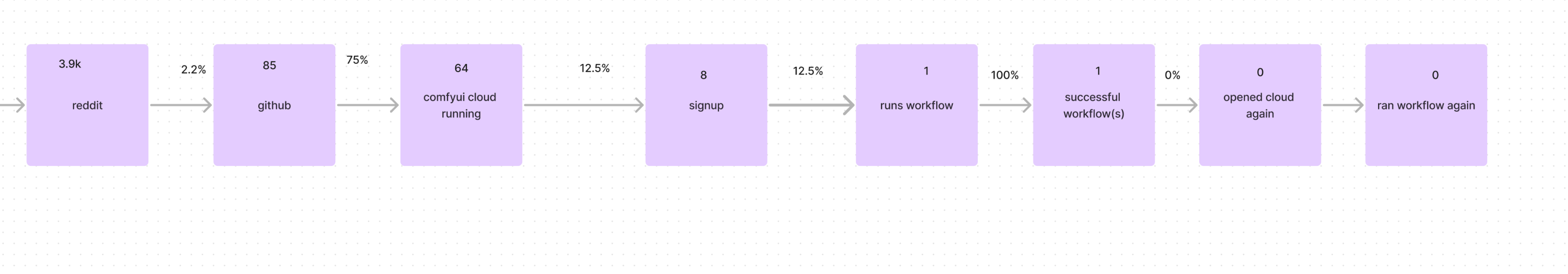

Even my worst-performing post (May 31) still gave me enough data for useful funnel analysis.

Why It Didn’t Stick

Despite steady traffic and some paying users, retention was poor. Most users stopped using the app shortly after trying it.

The main reason: ~50% of users ran into errors on their first run. I spent a long time debugging and patching edge cases, but ultimately the technical overhead was too large for a solo developer.

There was also no organic growth. I realized that to get word-of-mouth traction, I either needed:

- An unbelievably smooth experience, or

- First-mover advantage

I had neither. Even competitors with similar bugs grew organically just by being early.

User Research

I interviewed users over Discord, especially in the CivitAI community, and also did live debugging sessions while asking questions.

What I learned:

- Most users have around 10 GB of VRAM or less (e.g. an Nvidia GTX 1080, which has 8 GB)

- The biggest use cases for cloud were:

  - Upscaling

  - Video workflows (like AnimateDiff, Stable Video Diffusion)

  - VRAM intensive workflows (SDXL, etc)

- Speed was important, but not a dealbreaker — reliability mattered more

This helped me refocus my efforts on GPU-intensive workflows rather than just general image gen.

Engineering

The first MVP was a fork of bennykok/comfyui-deploy, but after a week I rebuilt the entire backend to better fit my needs.

Problems I Had to Solve

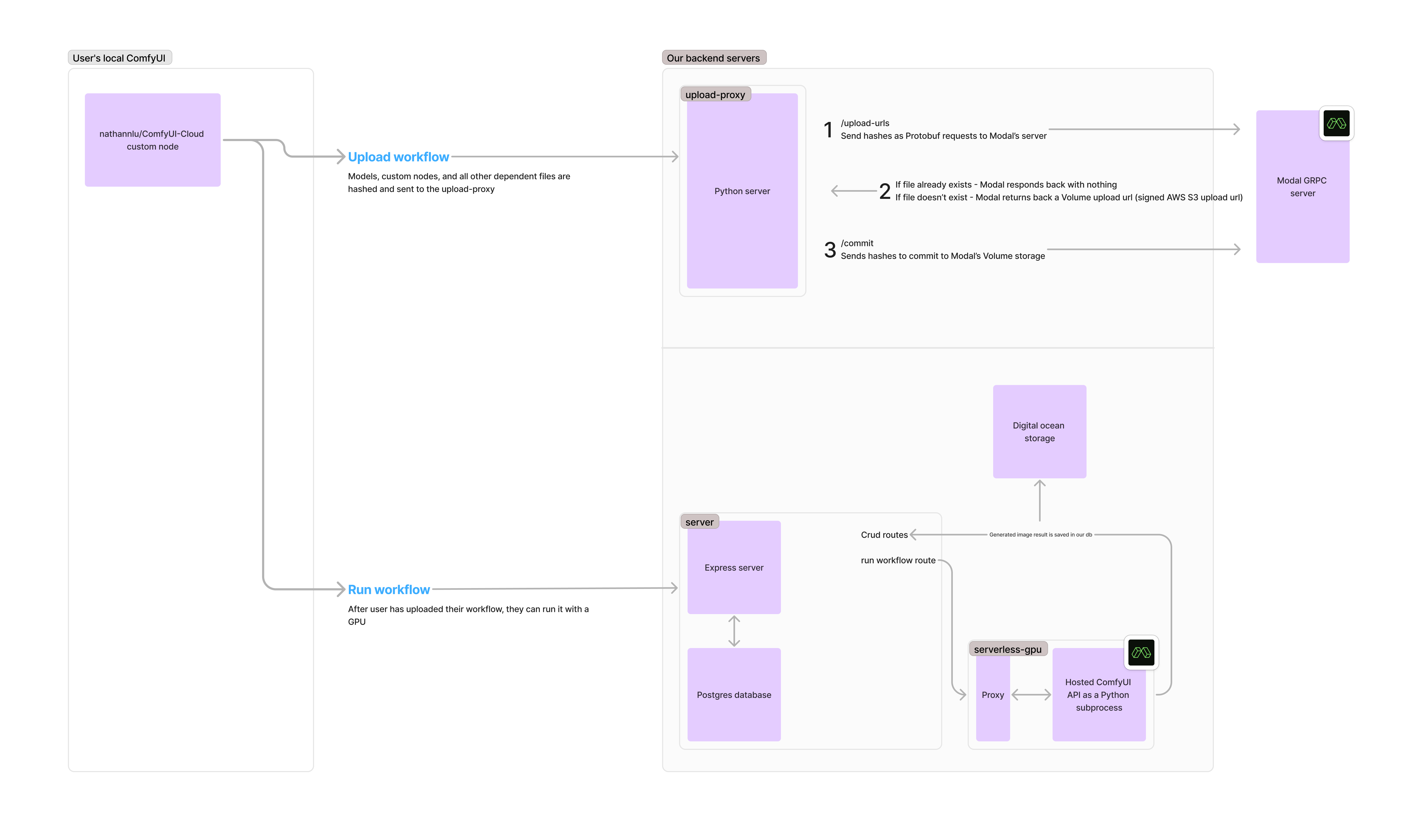

Secure model uploads (w/ Modal)

I used Modal as my serverless GPU backend, but ran into a challenge: users needed to upload large models from their local machine to Modal’s volume storage — without giving them access to my Modal account.

Modal’s default method requires authenticating via the CLI with a secret key, which grants full access to all volumes — obviously not secure for end users. Modal doesn’t officially support anonymous or user-scoped uploads.

However, after digging into Modal’s client codebase, I discovered they handle file uploads by generating S3 pre-signed URLs, which are validated via their gRPC API. These URLs let clients upload directly to storage without exposing any secrets.

I reverse-engineered that flow and integrated it into my system, allowing users to securely upload models from their browser or local ComfyUI — no secrets, no CLI setup, and no risk of exposing privileged access.
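To make the client side of a pre-signed upload concrete, here is a minimal sketch. It is not Modal's client code or API: it assumes a backend has already handed the client a pre-signed URL that accepts a single HTTP PUT, and the function names `build_upload_request` and `upload_model` are hypothetical. Real multi-gigabyte model uploads would likely need chunked or multipart transfers, which this sketch leaves out.

```python
import urllib.request


def build_upload_request(presigned_url: str, payload: bytes) -> urllib.request.Request:
    """Build an HTTP PUT request that sends `payload` to a pre-signed URL.

    The URL itself carries the time-limited signature, so the client
    attaches no API key and no Authorization header.
    """
    return urllib.request.Request(
        presigned_url,
        data=payload,
        method="PUT",
        headers={"Content-Type": "application/octet-stream"},
    )


def upload_model(presigned_url: str, model_path: str) -> int:
    """Upload a local model file and return the HTTP status code."""
    with open(model_path, "rb") as f:
        # Reading the whole file is fine for a sketch; large models
        # should be streamed or split into parts instead.
        payload = f.read()
    request = build_upload_request(presigned_url, payload)
    with urllib.request.urlopen(request) as response:
        return response.status
```

The point of the design is that the only credential the user's machine ever sees is a URL scoped to one object and valid for a short time.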

Custom nodes with Python dependencies

Initially, I tried creating and uploading a pre-built Python environment (venv) to Modal’s volume. But this approach hit a wall: virtual environments contain thousands of small files, which quickly ran into Modal’s volume storage and file count limits.

On top of that, Modal doesn’t support dynamically modifying the container image (i.e. no Dockerfile-style customization at runtime). So I couldn’t just bake user-specific dependencies into the worker image.

Rather than trying to maintain separate environments for each user, I switched to a dynamic install strategy: Every time a serverless GPU worker starts, it installs the required Python packages on the fly.

To keep this fast, I used uv, an extremely fast Python package manager written in Rust. This let me spin up environments with fresh dependencies in seconds, without blowing past Modal’s limits or compromising on flexibility.
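A startup hook along these lines could look like the sketch below. It is an illustration rather than the code I shipped: it assumes each custom node keeps a `requirements.txt` in its own folder under a `custom_nodes` directory (the usual ComfyUI convention), that `uv` is already on the worker's PATH, and that installing into the container's system Python via `--system` is acceptable. The function names are hypothetical.

```python
import subprocess
from pathlib import Path


def collect_requirement_files(custom_nodes_dir: str) -> list[Path]:
    """Find each custom node's requirements.txt (one level deep), sorted for stable ordering."""
    return sorted(Path(custom_nodes_dir).glob("*/requirements.txt"))


def build_install_command(requirement_files: list[Path]) -> list[str]:
    """Build a single `uv pip install` invocation covering every requirements file."""
    command = ["uv", "pip", "install", "--system"]
    for path in requirement_files:
        command += ["-r", str(path)]
    return command


def install_dependencies(custom_nodes_dir: str) -> None:
    """Install all custom-node dependencies; intended to run once at worker startup."""
    files = collect_requirement_files(custom_nodes_dir)
    if files:  # skip the uv call entirely when no node declares dependencies
        subprocess.run(build_install_command(files), check=True)
```

Passing every requirements file to one `uv` invocation lets the resolver see all constraints together, instead of letting a later node's install silently overwrite an earlier node's pinned versions.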

Final Architecture

Final Thoughts

I learned a lot from this project. But ultimately, I never solved the core problem well enough. Users didn’t get a stable experience.

In hindsight:

- I over-invested in flashy features (like syncing local ↔ cloud)

- I didn’t double down hard enough on reliability and error handling, which ended up being the biggest factor in retention

If you have questions about anything I’ve built here — the tech, the launches, the architecture — or just want to chat about generative AI, feel free to shoot me an email at nathanluyg@gmail.com.